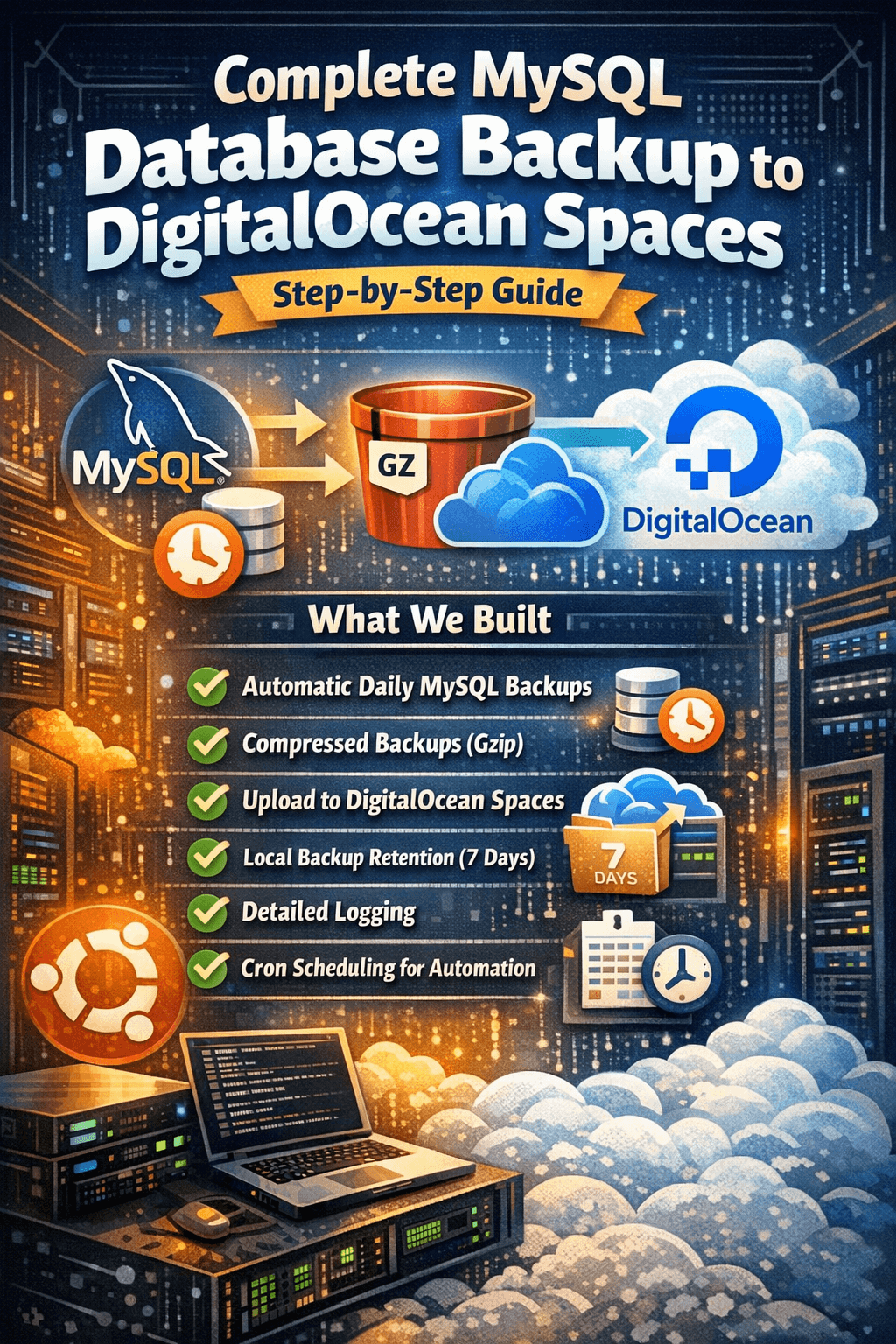

The easiest way to set up automated MySQL database backups to DigitalOcean Spaces or other S3-compatible buckets (with retention and logs) on an Ubuntu server.

Complete MySQL Database Backup Setup to DigitalOcean Spaces - Step-by-Step Guide

What We Built

• ✅ Automatic daily MySQL database backups

• ✅ Compressed backups (gzip) to save space

• ✅ Upload to DigitalOcean Spaces (S3-compatible storage)

• ✅ Local backup retention (7 days)

• ✅ Detailed logging

• ✅ Cron scheduling for automation

Step 1: Install AWS CLI

Since DigitalOcean Spaces is S3-compatible, we use the AWS CLI:

plaintext

# Download and install AWS CLI v2

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "/tmp/awscliv2.zip"

unzip -q /tmp/awscliv2.zip -d /tmp

/tmp/aws/install

# Verify installation

aws --version

# Output: aws-cli/2.33.7 Python/3.13.11 Linux/6.8.0-55-generic exe/x86_64.ubuntu.24

Step 2: Create Directory Structure

plaintext

# Create directories

mkdir -p /root/backup-scripts

mkdir -p /root/db-backups

Step 3: Create the Backup Script

plaintext

touch /root/backup-scripts/mysql-backup-spaces.sh

Then add the following contents to the file:

plaintext

#!/bin/bash

# Database Configuration

DB_USER="root"

DB_PASS="db_pass" # Your MySQL password

DB_NAME="db_name" # Your database name

BACKUP_DIR="/root/db-backups"

DATE=$(date +%Y%m%d_%H%M%S)

BACKUP_FILE="${BACKUP_DIR}/${DB_NAME}_${DATE}.sql.gz"

# DigitalOcean Spaces Configuration

SPACE_NAME="bucket-name" # Your space name

SPACE_REGION="blr1" # Your region (nyc3, sgp1, sfo3, fra1, blr1)

SPACE_ENDPOINT="https://blr1.digitaloceanspaces.com"

SPACE_PATH="backup-folder" # Folder in your space

# DigitalOcean Spaces Credentials

export AWS_ACCESS_KEY_ID="Your_access_id"

export AWS_SECRET_ACCESS_KEY="Your_access_key"

# Create backup directory if not exists

mkdir -p ${BACKUP_DIR}

# Create backup

echo "[$(date)] Starting backup of database: ${DB_NAME}"

# Without pipefail, $? below would only reflect gzip, not mysqldump

set -o pipefail

mysqldump -u"${DB_USER}" -p"${DB_PASS}" "${DB_NAME}" 2>/dev/null | gzip > "${BACKUP_FILE}"

if [ $? -eq 0 ]; then

BACKUP_SIZE=$(du -h ${BACKUP_FILE} | cut -f1)

echo "[$(date)] Backup created successfully: ${BACKUP_FILE} (${BACKUP_SIZE})"

# Upload to DigitalOcean Spaces

echo "[$(date)] Uploading to DigitalOcean Spaces: ${SPACE_NAME}/${SPACE_PATH}"

aws s3 cp "${BACKUP_FILE}" "s3://${SPACE_NAME}/${SPACE_PATH}/" \
--endpoint-url="${SPACE_ENDPOINT}" \
--region us-east-1 \
--acl private

if [ $? -eq 0 ]; then

echo "[$(date)] Upload successful to DigitalOcean Spaces"

else

echo "[$(date)] ERROR: Upload to Spaces failed"

exit 1

fi

# Delete local backups older than 7 days

find ${BACKUP_DIR} -name "*.sql.gz" -mtime +7 -delete

echo "[$(date)] Old local backups cleaned up (kept last 7 days)"

echo "[$(date)] Backup process completed successfully"

else

echo "[$(date)] ERROR: Database backup failed"

exit 1

fi

Step 4: Make Script Executable

plaintext

chmod +x /root/backup-scripts/mysql-backup-spaces.sh

Step 5: Test the Script

We tested it manually first:

plaintext

/root/backup-scripts/mysql-backup-spaces.sh

Test Output:

plaintext

[Tue Jan 27 19:11:14 UTC 2026] Starting backup of database: test_db

[Tue Jan 27 19:11:15 UTC 2026] Backup created successfully: /root/db-backups/test_db_20260127_191114.sql.gz (2.2M)

[Tue Jan 27 19:11:15 UTC 2026] Uploading to DigitalOcean Spaces: developerbook/backup-folder

upload: db-backups/test_db_20260127_191114.sql.gz to s3://developerbook/backup-folder/test_db_20260127_191114.sql.gz

[Tue Jan 27 19:11:21 UTC 2026] Upload successful to DigitalOcean Spaces

[Tue Jan 27 19:11:21 UTC 2026] Old local backups cleaned up (kept last 7 days)

[Tue Jan 27 19:11:21 UTC 2026] Backup process completed successfully

✅ Success! 2.2MB compressed backup uploaded successfully.
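A successful run only shows that a file was written and uploaded. As an optional extra check, the archive itself can be tested for readability. The sketch below is one way to do that with `gzip -t`; `verify_backup` is a hypothetical helper name, not part of the script above, and the demo files are throwaway stand-ins for real dumps.

```shell
#!/bin/bash
# Sketch: confirm a compressed dump is a readable gzip archive.
# verify_backup is a hypothetical helper, not part of the original script.
verify_backup() {
    local file="$1"
    # An empty or missing file means the dump produced nothing
    [ -s "$file" ] || return 1
    # gzip -t reads the whole archive and checks its integrity without extracting it
    gzip -t "$file" 2>/dev/null
}

# Demonstration on throwaway files (no database needed)
demo_dir=$(mktemp -d)
echo "CREATE TABLE t (id INT);" | gzip > "${demo_dir}/good.sql.gz"
echo "not a gzip stream" > "${demo_dir}/bad.sql.gz"

verify_backup "${demo_dir}/good.sql.gz" && echo "good.sql.gz: OK"
verify_backup "${demo_dir}/bad.sql.gz" || echo "bad.sql.gz: FAILED"
```

In the backup script, a call such as `verify_backup "${BACKUP_FILE}"` could sit between the dump and the upload, so a truncated archive never reaches Spaces.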

Step 6: Set Up Automatic Daily Backups

We configured a cron job to run daily at 2:00 AM:

plaintext

# Add cron job (appends to the current crontab instead of overwriting it)

(crontab -l 2>/dev/null; echo "0 2 * * * /root/backup-scripts/mysql-backup-spaces.sh >> /var/log/mysql-backup.log 2>&1") | crontab -

# Verify cron job

crontab -l

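# Output: 0 2 * * * /root/backup-scripts/mysql-backup-spaces.sh >> /var/log/mysql-backup.log 2>&1

Key Features Implemented

1. Compression

Dumps are piped through gzip before they are written to disk.

2. Automatic Cleanup

Local backups older than 7 days are deleted after each successful upload.

3. Error Handling

Checks if backup creation succeeded

Checks if upload succeeded

Exits with error codes for monitoring

4. Detailed Logging

Every step prints a timestamped message, and cron appends the output to /var/log/mysql-backup.log.

5. Security

Backups are uploaded with a private ACL.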
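The "backup creation succeeded" check depends on how bash reports the exit status of a pipeline. By default `$?` holds the status of the last command only (gzip in this script), so a failed dump can go unnoticed unless `set -o pipefail` is in effect. The sketch below illustrates the difference; `failing_dump` is a hypothetical stand-in for a `mysqldump` call that fails.

```shell
#!/bin/bash
# Sketch: how a pipeline's exit status hides or exposes a failed dump.
# failing_dump is a hypothetical stand-in for a mysqldump call that fails.
failing_dump() {
    echo "partial output"
    return 2
}

# Default behaviour: the pipeline's status is that of the last command (gzip)
set +o pipefail
status_default=0
failing_dump | gzip > /dev/null || status_default=$?

# With pipefail: the pipeline returns the last non-zero status among its commands
set -o pipefail
status_pipefail=0
failing_dump | gzip > /dev/null || status_pipefail=$?

echo "without pipefail: ${status_default}"
echo "with pipefail:    ${status_pipefail}"
```

The first line reports 0 even though the dump failed, while the second reports 2, which is the status an `if [ $? -eq 0 ]` check needs to see.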

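The automatic cleanup can be tried safely outside the real backup directory. This is a minimal sketch that assumes GNU `touch -d` (as shipped on Ubuntu) to back-date files; `prune_old_backups` is a hypothetical wrapper around the same `find` expression the script uses.

```shell
#!/bin/bash
# Sketch: delete local backups older than a given number of days.
# prune_old_backups is a hypothetical helper wrapping the script's find expression.
prune_old_backups() {
    local dir="$1" days="$2"
    find "$dir" -name "*.sql.gz" -mtime +"$days" -delete
}

# Demonstration in a throwaway directory with back-dated files (GNU touch -d)
demo_dir=$(mktemp -d)
touch "${demo_dir}/recent.sql.gz"
touch -d "10 days ago" "${demo_dir}/old.sql.gz"
touch -d "10 days ago" "${demo_dir}/old-notes.txt"   # not a backup, must survive

prune_old_backups "$demo_dir" 7
ls "$demo_dir"
```

Only `old.sql.gz` is removed. Note that `find` ignores fractional days, so `-mtime +7` matches files that are at least 8 full days old.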
Monitoring Your Backups

View Backup Logs

plaintext

# View all logs

cat /var/log/mysql-backup.log

# Follow logs in real-time

tail -f /var/log/mysql-backup.log

# View recent logs

tail -20 /var/log/mysql-backup.log

Check Local Backups

plaintext

ls -lh /root/db-backups/

Verify Uploads to Spaces

plaintext

aws s3 ls s3://bucket-name/backup-folder/ \
--endpoint-url=https://blr1.digitaloceanspaces.com \
--region us-east-1

Customization Options

Change Backup Schedule

plaintext

# Edit cron job

crontab -e

# Example schedules:

# Daily at 3:30 AM: 30 3 * * *

# Every 6 hours: 0 */6 * * *

# Twice daily: 0 2,14 * * *

Change Retention Period

In the script, modify:

plaintext

# Keep 30 days instead of 7

find ${BACKUP_DIR} -name "*.sql.gz" -mtime +30 -delete

Multiple Databases

plaintext

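DB_NAMES=("database1" "database2" "database3")

for DB_NAME in "${DB_NAMES[@]}"; do

# Back up each database to its own timestamped file

BACKUP_FILE="${BACKUP_DIR}/${DB_NAME}_${DATE}.sql.gz"

mysqldump -u"${DB_USER}" -p"${DB_PASS}" "${DB_NAME}" | gzip > "${BACKUP_FILE}"

done

Restore Process

To restore a backup: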

plaintext

# Download from Spaces

aws s3 cp s3://bucket-name/backup-folder/test_db_20260127_191114.sql.gz /tmp/ \
--endpoint-url=https://blr1.digitaloceanspaces.com \
--region us-east-1

# Restore to database

gunzip < /tmp/test_db_20260127_191114.sql.gz | mysql -u root -p test_db

Security Best Practices

1. Protect Script Permissions:

plaintext

chmod 700 /root/backup-scripts/mysql-backup-spaces.sh

2. Use Environment Variables:

plaintext

# Create credentials file

cat > /root/.backup-credentials << 'EOF'

export AWS_ACCESS_KEY_ID="your_key"

export AWS_SECRET_ACCESS_KEY="your_secret"

export DB_PASS="your_mysql_password"

EOF

chmod 600 /root/.backup-credentials

# Source in script

source /root/.backup-credentials

3. Enable Spaces Access Logging in DigitalOcean control panel
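For the credentials file in item 2, the script could refuse to load a file that other users can read. The following is a sketch under stated assumptions: `load_credentials` is a hypothetical helper, `stat -c` is the GNU coreutils form available on Ubuntu, and the demo uses a temporary file with a placeholder password instead of /root/.backup-credentials.

```shell
#!/bin/bash
# Sketch: source a credentials file only if it is private to its owner.
# load_credentials is a hypothetical helper; stat -c is the GNU coreutils form.
load_credentials() {
    local file="$1" mode
    [ -f "$file" ] || { echo "ERROR: ${file} not found" >&2; return 1; }
    mode=$(stat -c '%a' "$file")
    # Accept only owner read/write (600) or owner read-only (400)
    if [ "$mode" != "600" ] && [ "$mode" != "400" ]; then
        echo "ERROR: ${file} has mode ${mode}, expected 600" >&2
        return 1
    fi
    # Load the exported variables into the current shell
    . "$file"
}

# Demonstration with a throwaway file and a placeholder value
demo_file=$(mktemp)
echo 'export DB_PASS="example_password"' > "$demo_file"

chmod 644 "$demo_file"
load_credentials "$demo_file" || echo "refused world-readable file"

chmod 600 "$demo_file"
load_credentials "$demo_file" && echo "loaded, DB_PASS is set: ${DB_PASS:+yes}"
```

In the backup script, `load_credentials /root/.backup-credentials || exit 1` could replace the plain `source` line, so a permissions mistake stops the run instead of silently exposing secrets.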